Cycle-Interactive Generative Adversarial Network for Robust Unsupervised Low-Light Enhancement

Abstract

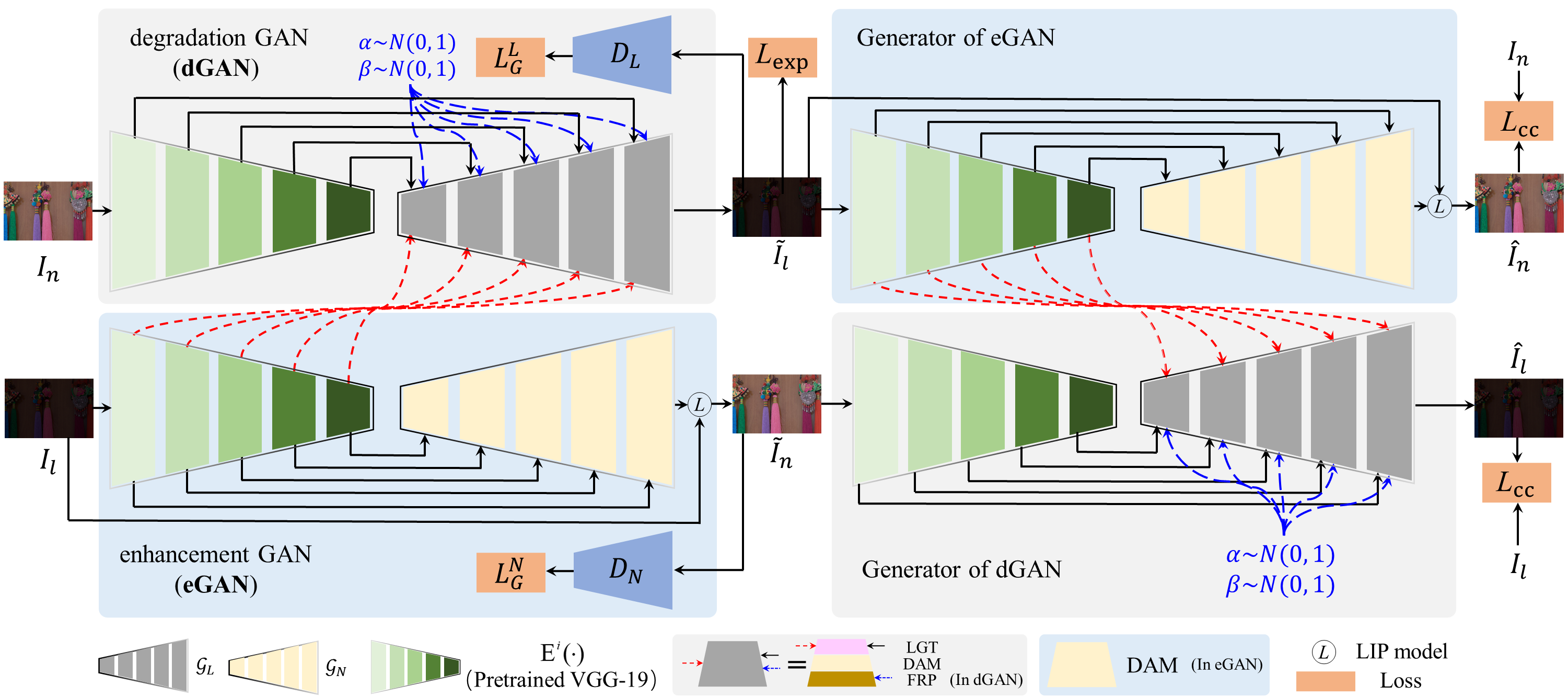

Freed from the fundamental limitation of fitting to paired training data, recent unsupervised low-light enhancement methods excel at adjusting the illumination and contrast of images. However, the lack of supervision on detailed signals leaves noise suppression unresolved, which largely impedes the wide deployment of these methods in real-world applications. Herein, we propose a novel Cycle-Interactive Generative Adversarial Network (CIGAN) for unsupervised low-light image enhancement, which is capable of not only better transferring illumination distributions between low/normal-light images but also manipulating detailed signals between the two domains, e.g., suppressing/synthesizing realistic noise in the cyclic enhancement/degradation process. In particular, the proposed low-light guided transformation feeds the features of low-light images forward from the generator of the enhancement GAN (eGAN) into the generator of the degradation GAN (dGAN). With the learned information of real low-light images, dGAN can synthesize more realistic and diverse illumination and contrast in low-light images. Moreover, the feature randomized perturbation module in dGAN learns to increase the feature randomness to produce diverse feature distributions, encouraging the synthesized low-light images to contain realistic noise. Extensive experiments demonstrate both the superiority of the proposed method and the effectiveness of each module in CIGAN.

Cycle-Interactive Generative Adversarial Network for Image Enhancement

Experimental Results

Quantitative Comparison

|

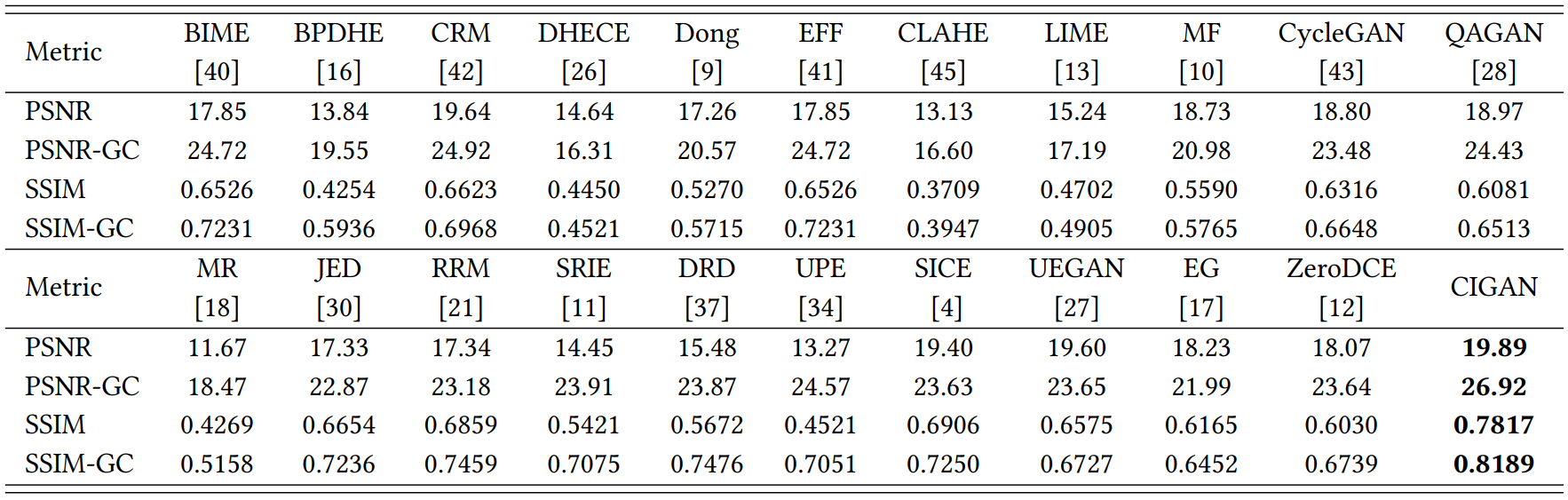

Table. 1. Quantitative comparisons of different methods on real low-light test images in LOL-V2 dataset

|

Table 1 compares the proposed CIGAN with classical and state-of-the-art methods on the LOL-V2 dataset. The proposed method outperforms all previous methods in the comparison, consistently achieving the highest scores in terms of PSNR, PSNR-GC, SSIM, and SSIM-GC. This indicates that CIGAN is more effective in illumination enhancement, structure restoration, and noise suppression. In particular, it is significantly superior to other state-of-the-art unsupervised methods (i.e., CycleGAN, EnlightenGAN, and ZeroDCE). This is because, on the one hand, dGAN makes the attributes of synthesized low-light images consistent with those of real ones, and on the other hand, eGAN is able to restore high-quality normal-light images. Another interesting observation is that CIGAN even achieves better performance than leading supervised methods (i.e., DRD, UPE, and SICE) trained on a large number of paired images. It is also worth noting that the larger gap between PSNR with and without Gamma correction shows that our method can effectively remove intensive noise and restore vivid details.
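To make the difference between PSNR and PSNR-GC concrete, the following is a minimal sketch in plain Python. This page does not state how the Gamma correction is chosen, so the sketch assumes a single global gamma that matches the mean brightness of the result to that of the ground truth. The helper `brightness_matching_gamma`, the function `psnr_gc`, and the toy pixel values are hypothetical and are not taken from the CIGAN code.

```python
import math


def psnr(pred, target, peak=1.0):
    """PSNR in dB between two equal-length sequences of pixel values in [0, peak]."""
    if len(pred) != len(target) or not pred:
        raise ValueError("inputs must be non-empty and of equal length")
    mse = sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(peak ** 2 / mse)


def brightness_matching_gamma(pred, target, eps=1e-6):
    """Hypothetical helper: gamma such that mean(pred) ** gamma == mean(target).

    Assumes pixel values in [0, 1]; the means are clamped away from 0 and 1
    so that both logarithms are finite and non-zero.
    """
    mean_pred = min(max(sum(pred) / len(pred), eps), 1.0 - eps)
    mean_target = min(max(sum(target) / len(target), eps), 1.0 - eps)
    return math.log(mean_target) / math.log(mean_pred)


def psnr_gc(pred, target):
    """PSNR after a global gamma correction of the prediction (assumed protocol)."""
    gamma = brightness_matching_gamma(pred, target)
    corrected = [p ** gamma for p in pred]
    return psnr(corrected, target)


# Toy example: the prediction has the right structure but is too dark.
target = [0.2, 0.3, 0.4, 0.5]
pred = [t ** 2 for t in target]

plain = psnr(pred, target)
corrected = psnr_gc(pred, target)
print(f"PSNR: {plain:.2f} dB, PSNR-GC: {corrected:.2f} dB")
```

Under this assumption, the Gamma-corrected score factors out a global brightness mismatch, so what remains mostly reflects errors in structure and noise rather than in overall illumination.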

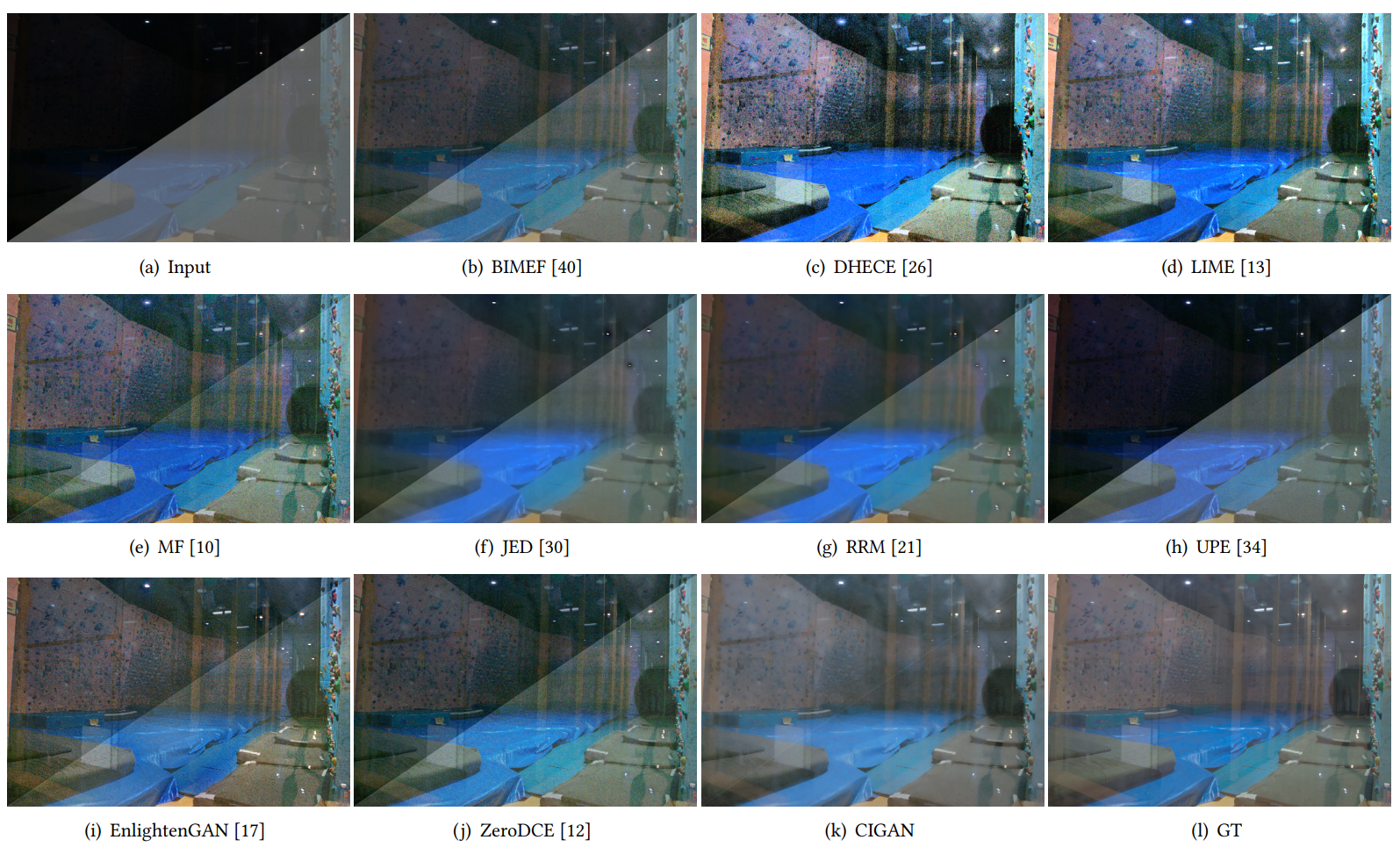

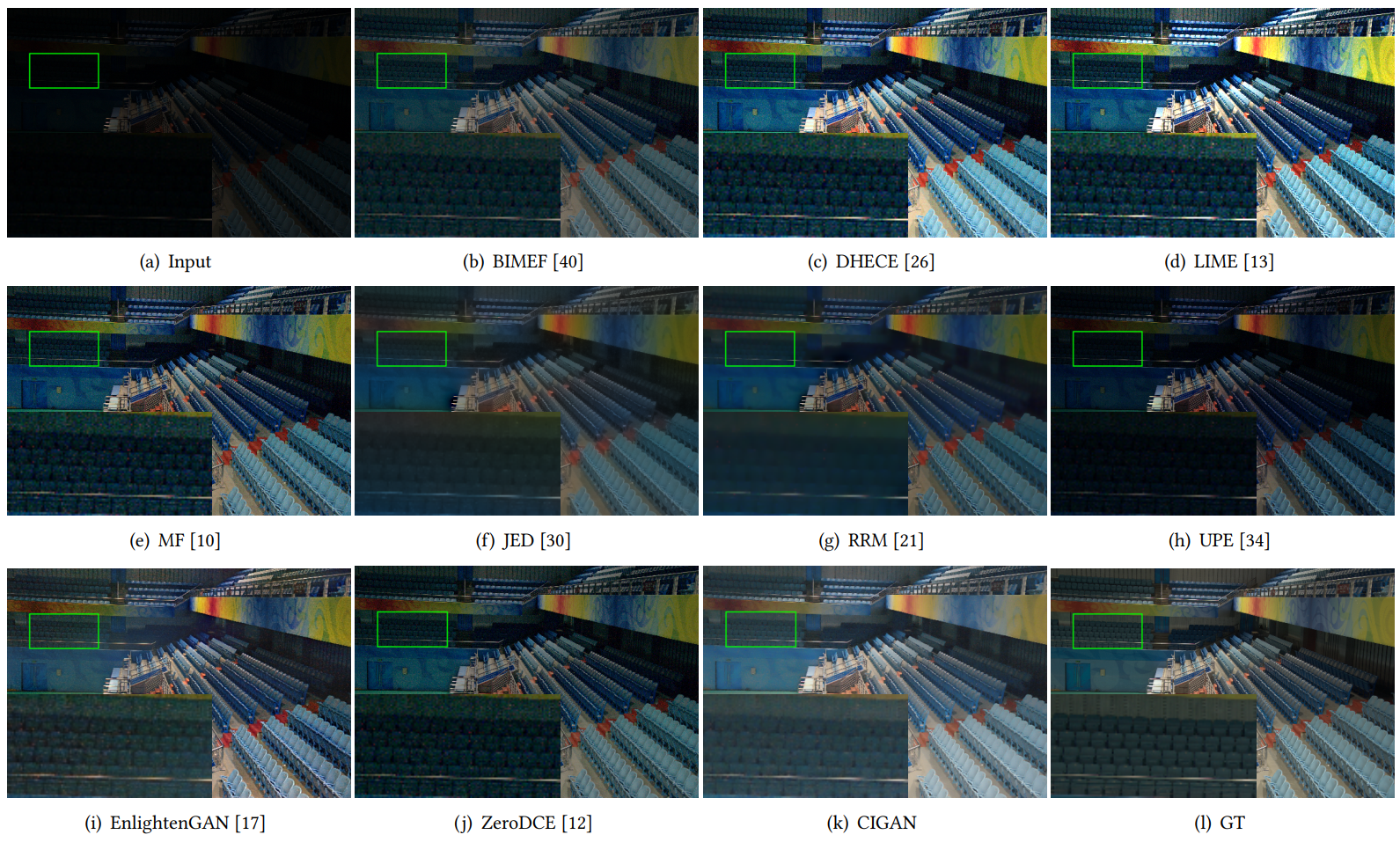

Qualitative Comparison

Download

If you use the code in your research, we kindly ask that you reference this website and our paper listed below.

Zhangkai Ni, Wenhan Yang, Hanli Wang, Shiqi Wang, Lin Ma, and Sam Kwong, "Cycle-Interactive Generative Adversarial Network for Robust Unsupervised Low-Light Enhancement," in Proceedings of the 30th ACM International Conference on Multimedia (ACM Multimedia), October 2022. (accepted) Paper | Code (coming soon) | BibTeX

@inproceedings{ni2022cycle,
  title={Cycle-Interactive Generative Adversarial Network for Robust Unsupervised Low-Light Enhancement},
  author={Ni, Zhangkai and Yang, Wenhan and Wang, Hanli and Wang, Shiqi and Ma, Lin and Kwong, Sam},
  booktitle={Proceedings of the 30th ACM International Conference on Multimedia},
  pages={},
  year={2022}
}

References

| Method | Reference |
| --- | --- |
| CIGAN | Zhangkai Ni, Wenhan Yang, Hanli Wang, Shiqi Wang, Lin Ma, and Sam Kwong. 2022. Cycle-Interactive Generative Adversarial Network for Robust Unsupervised Low-Light Enhancement. In Proceedings of the 30th ACM International Conference on Multimedia. (accepted) |
| BIMEF | Zhenqiang Ying, Ge Li, and Wen Gao. 2017. A bio-inspired multi-exposure fusion framework for low-light image enhancement. arXiv preprint arXiv:1711.00591 (2017). |
| DHECE | Keita Nakai, Yoshikatsu Hoshi, and Akira Taguchi. 2013. Color image contrast enhacement method based on differential intensity/saturation gray-levels histograms. In Proceedings of the International Symposium on Intelligent Signal Processing and Communication Systems. 445–449. |
| LIME | Xiaojie Guo, Yu Li, and Haibin Ling. 2016. LIME: Low-light image enhancement via illumination map estimation. IEEE Transactions on Image Processing 26, 2 (2016), 982–993. |
| MEF | Xueyang Fu, Delu Zeng, Yue Huang, Yinghao Liao, Xinghao Ding, and John Paisley. 2016. A fusion-based enhancing method for weakly illuminated images. Signal Processing 129 (2016), 82–96. |
| JED | Xutong Ren, Mading Li, Wen-Huang Cheng, and Jiaying Liu. 2018. Joint enhancement and denoising method via sequential decomposition. In Proceedings of the IEEE International Symposium on Circuits and Systems. 1–5. |
| RRM | Mading Li, Jiaying Liu, Wenhan Yang, Xiaoyan Sun, and Zongming Guo. 2018. Structure-revealing low-light image enhancement via robust retinex model. IEEE Transactions on Image Processing 27, 6 (2018), 2828–2841. |
| UPE | Ruixing Wang, Qing Zhang, Chi-Wing Fu, Xiaoyong Shen, Wei-Shi Zheng, and Jiaya Jia. 2019. Underexposed photo enhancement using deep illumination estimation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 6849–6857. |
| EnlightenGAN | Yifan Jiang, Xinyu Gong, Ding Liu, Yu Cheng, Chen Fang, Xiaohui Shen, Jianchao Yang, Pan Zhou, and Zhangyang Wang. 2021. EnlightenGAN: Deep Light Enhancement Without Paired Supervision. IEEE Transactions on Image Processing 30 (2021), 2340–2349. |
| ZeroDCE | Chunle Guo, Chongyi Li, Jichang Guo, Chen Change Loy, Junhui Hou, Sam Kwong, and Runmin Cong. 2020. Zero-Reference Deep Curve Estimation for Low-Light Image Enhancement. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 1780–1789. |

Contact

If you have any questions, please feel free to contact Dr. Zhangkai Ni.

Last update: July 2022